The Inception Problem

If you’ve ever had a lengthy conversation with ChatGPT or Claude where you jumped between topics, asked follow-up questions, and provided corrections, you might have noticed the AI starting to get… confused.

Suddenly it’s mixing up information from earlier in your chat. It’s forgetting what you just asked. It’s making up details that never existed.

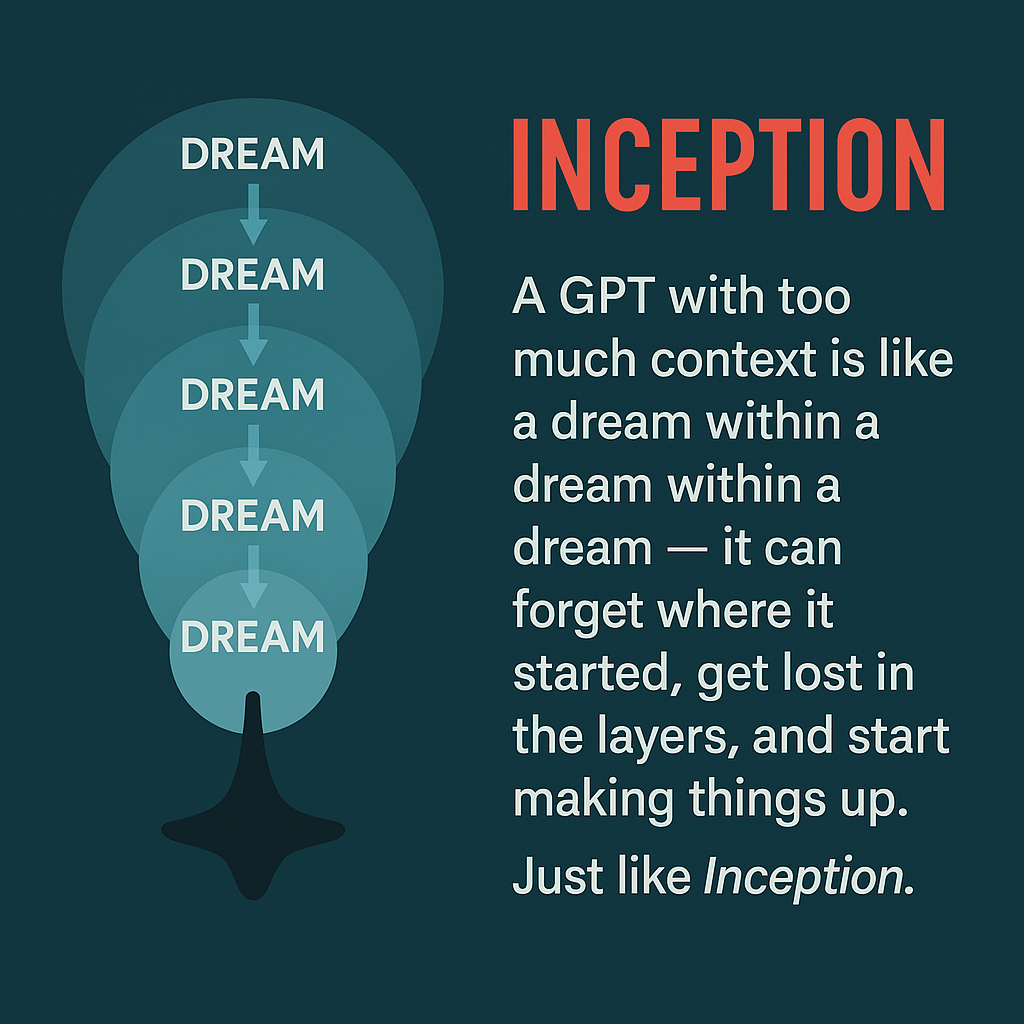

I call this The Inception Problem, and it perfectly explains one of the biggest challenges in working with large language models.

Why Inception Is The Perfect Metaphor

In Christopher Nolan’s Inception (2010), characters enter dreams within dreams, going deeper into layered subconscious realities. Each layer becomes more unstable and distorted the further down they go. Time stretches, logic bends, and it becomes harder to remember what’s real.

This is exactly what happens inside a GPT’s “mind” during a long conversation.

How Context Windows Work (or Don’t)

When a GPT model processes a massive context window — full of previous conversations, instructions, corrections, and data — it’s like going deeper into layers of thought:

- The further down it goes, the harder it becomes to keep track of what’s current, what’s relevant, and what was just a passing idea.

- The model tries to respond from inside all those layers, but like in Inception, the deeper you are, the more likely you are to lose clarity.

- It starts mixing up old information with new, forgetting the “surface” question, or hallucinating details that were never there — just like characters forgetting their original goal in a dream.

The Dev-to-AI-Engineer Lesson

As AI engineers, we need to be conscious of this limitation. Some practical takeaways:

- Keep conversations focused - Don’t mix too many topics in one chat

- Start fresh when switching to a new task or topic

- Use system prompts wisely - They’re part of the context budget too

- Remember that AI doesn’t know what to focus on - Unlike humans who naturally pay more attention to important things, AI models give similar weight to everything in the conversation. That’s why they start mixing things up when conversations get too long or complex.

Understanding these limitations helps us build better AI systems and use existing ones more effectively.